But here is the key to how a language model learns: BabyGPT keeps a score of exactly how bad its guesses are.

Every round of training, it goes through the original text, a few words at a time, and compares its guesses for the next letter with what actually comes next. It then calculates a score, known as the “loss,” which measures the difference between its predictions and the actual text. A loss of zero would mean that its guesses always correctly matched the next letter. The smaller the loss, the closer its guesses are to the text.

Each training round, BabyGPT tries to improve its guesses by reducing this loss.
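The article does not give a formula for the loss. A common choice for next-character prediction is average cross-entropy: the negative log of the probability the model gave to the character that actually came next. Below is a minimal sketch under that assumption. The function `next_char_loss` and the dictionary-of-probabilities format are hypothetical and exist only for illustration.

```python
import math


def next_char_loss(predictions, text):
    """Average cross-entropy between next-character guesses and the real text.

    Assumption: predictions[i] maps candidate characters to the probability
    the model gives each of them for position i + 1 of `text`.
    """
    if len(predictions) != len(text) - 1:
        raise ValueError("need one prediction per next character")
    total = 0.0
    for i, probs in enumerate(predictions):
        actual = text[i + 1]          # the character that really came next
        p = probs.get(actual, 0.0)    # probability the model gave to it
        # -log(p) is 0 when p == 1 and grows as p shrinks toward 0.
        total += -math.log(p) if p > 0 else math.inf
    return total / len(predictions)


text = "the"
# After "t" the right guess is "h"; after "h" it is "e".
perfect = [{"h": 1.0}, {"e": 1.0}]
hesitant = [{"h": 0.5, "x": 0.5}, {"e": 0.5, "z": 0.5}]
mostly_wrong = [{"h": 0.1, "x": 0.9}, {"e": 0.1, "z": 0.9}]

print(next_char_loss(perfect, text))       # 0.0
print(next_char_loss(hesitant, text))      # about 0.693 (ln 2)
print(next_char_loss(mostly_wrong, text))  # about 2.303 (ln 10)
```

Shifting probability toward the character that actually came next lowers the score. This is the sense in which a training round "reduces the loss." Models of this kind typically make that shift by nudging their internal parameters with gradient descent, not by editing probabilities directly as this toy example does.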

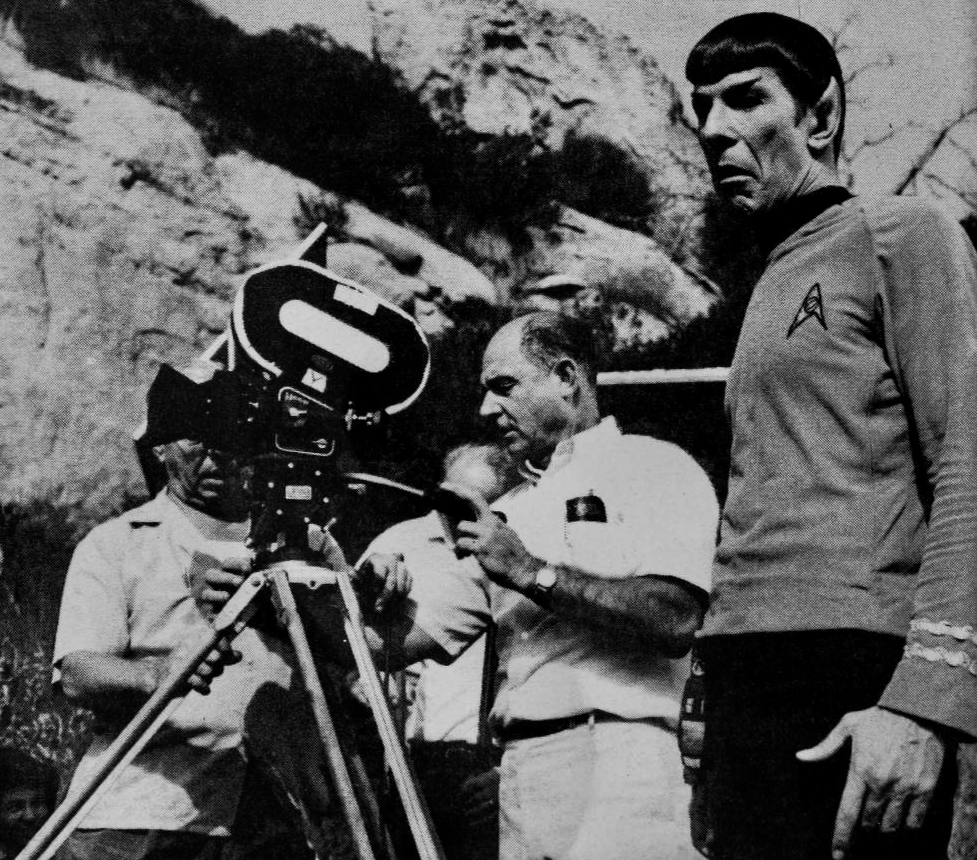

The largest language models are trained on over a terabyte of internet text, containing hundreds of billions of words. Their training costs millions of dollars and involves calculations that take weeks or even months on hundreds of specialized computers.

BabyGPT is ant-sized in comparison. We trained it for about an hour on a laptop on just a few megabytes of text, small enough to attach to an email. Unlike the larger models, which start their training with a large vocabulary, BabyGPT doesn’t yet know any words. It makes its guesses one letter at a time, which makes it a bit easier for us to see what it’s learning.

Initially, its guesses are completely random and include lots of special characters. Output such as '?kZhc,TK996') would make a great password, but it’s a far cry from anything resembling Jane Austen or Shakespeare. BabyGPT hasn’t yet learned which letters are typically used in English, or that words even exist.

This is how language models usually start off: They guess randomly and produce gibberish. But they learn from their mistakes, and over time, their guesses get better. Over many, many rounds of training, language models can learn to write. They learn statistical patterns that piece words together into sentences and paragraphs.

After 250 rounds of training (about 30 seconds of processing on a modern laptop), BabyGPT has learned its ABCs and is starting to babble.

In particular, our model has learned which letters are most frequently used in the text. You’ll see a lot of the letter “e” because that is the most common letter in English. If you look closely, you’ll find that it has also learned some small words: I, to, the, you, and so on. It has a tiny vocabulary, but that doesn’t stop it from inventing words like alingedimpe, ratlabus and mandiered.
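BabyGPT picks up letter frequencies indirectly, by reducing its loss. The sketch below takes a much simpler shortcut: it skips training and directly counts characters (a "unigram" model). It then contrasts uniformly random output, like the untrained stage, with output weighted by those counts, like the early babble. This is a swapped-in technique, not BabyGPT's method. The vocabulary, the sample text and all function names are illustrative assumptions.

```python
import random
import string
from collections import Counter

# Hypothetical character set standing in for the model's vocabulary.
VOCAB = string.ascii_letters + string.digits + string.punctuation + " "


def random_babble(n, rng):
    """Untrained stage: every character in VOCAB is equally likely."""
    return "".join(rng.choice(VOCAB) for _ in range(n))


def letter_frequencies(text):
    """Count how often each character appears in the training text."""
    return Counter(text)


def frequency_babble(freqs, n, rng):
    """Draw n characters independently, weighted by how often each was seen."""
    chars = list(freqs)
    weights = [freqs[c] for c in chars]
    return "".join(rng.choices(chars, weights=weights, k=n))


# Sample training text (the opening line of Pride and Prejudice).
corpus = ("It is a truth universally acknowledged, that a single man in "
          "possession of a good fortune, must be in want of a wife.")

rng = random.Random(42)  # fixed seed so runs are repeatable
print(random_babble(40, rng))            # random mix of letters, digits, symbols
freqs = letter_frequencies(corpus.lower())
print(freqs.most_common(5))              # most frequent characters first
print(frequency_babble(freqs, 40, rng))  # frequent characters dominate
```

Counting alone can only reproduce how common each character is. It has no notion of which letter tends to follow which, so it cannot form words. That is one reason a trained model's babble, which does produce small words, goes beyond this sketch.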
